I have a similar problem on a Turris Omnia: my downloads never exceed 300 Mb/s, while the theoretical speed is 900 Mb/s. With the operator’s modem I do reach ~900 Mb/s, but with the Omnia the average is just over 200 Mb/s, with peaks of 300 Mb/s. The Omnia is connected directly to the operator’s SFP, so the ISP’s modem is completely replaced. I sent a request with the diagnostics file to the technical support e-mail address, hoping that some good people could give me a hand.

Could it be caused by the data collection?

Suricata-based stuff can be CPU-intensive IIRC, e.g. Pakon.

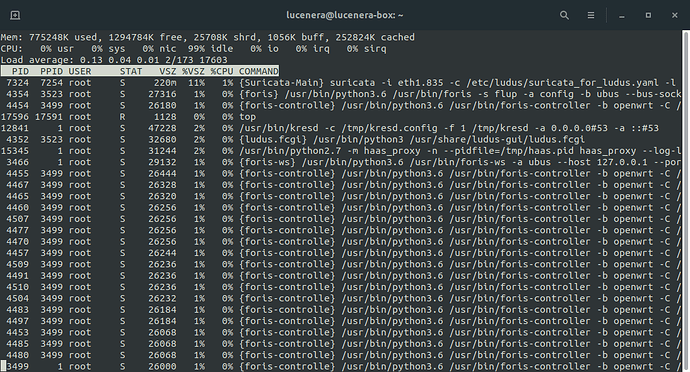

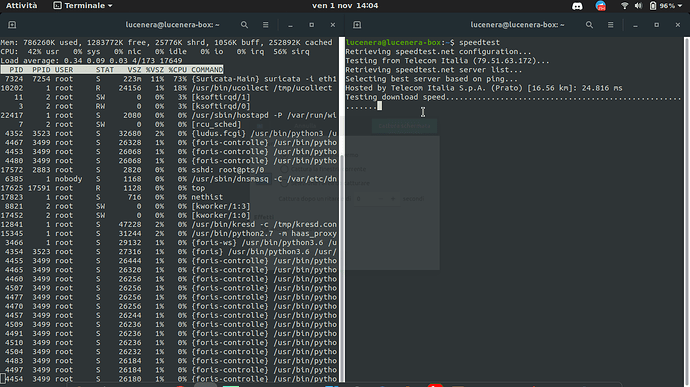

But if I run top over SSH, both during a speed test and without one, CPU usage is barely above zero percent.

You probably know this already, but because of the way top reports its numbers, you need to look at the idle percentage: CPU load is then 100 - idle%. On a dual core this is typically scaled so that you either get 50% load for a fully pegged CPU, or the numbers go up to 200% ;). In any case, if any of the CPUs gets close to 0% idle, that is a strong indicator that the router is overloaded.
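To get the same "100 - idle%" figure per core without depending on how a particular top build scales its columns, one can sample `/proc/stat` twice and compare. This is a minimal sketch, assuming a POSIX shell with awk (BusyBox on TurrisOS should qualify); `busy_pct` and `per_cpu_load` are hypothetical helper names, not part of any Turris tool. iowait is counted as idle, while irq and softirq count as busy time.

```sh
#!/bin/sh
# Sketch (hypothetical helpers): per-core load as "100 - idle%" from /proc/stat.
# Per-core line: cpuN user nice system idle iowait irq softirq steal guest guest_nice
# guest/guest_nice are already included in user/nice, so only fields 2..9 are summed.

# busy_pct "<cpuN line at t0>" "<cpuN line at t1>" -> integer busy percentage
busy_pct() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        {
            total = 0
            for (i = 2; i <= 9; i++) total += $i
            t[NR] = total
            d[NR] = $5 + $6          # idle + iowait count as idle time
        }
        END {
            dt = t[2] - t[1]
            di = d[2] - d[1]
            if (dt <= 0) { print 0; exit }
            printf "%d\n", 100 * (dt - di) / dt
        }'
}

# per_cpu_load: sample all cores one second apart and print their load
per_cpu_load() {
    before=$(grep '^cpu[0-9]' /proc/stat)
    sleep 1
    after=$(grep '^cpu[0-9]' /proc/stat)
    echo "$before" | while read -r line0; do
        name=${line0%% *}
        line1=$(echo "$after" | grep "^$name ")
        echo "$name busy: $(busy_pct "$line0" "$line1")%"
    done
}
```

Running `per_cpu_load` while a speed test is in progress should show whether one core sits near 100% busy even when the overall average looks moderate.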

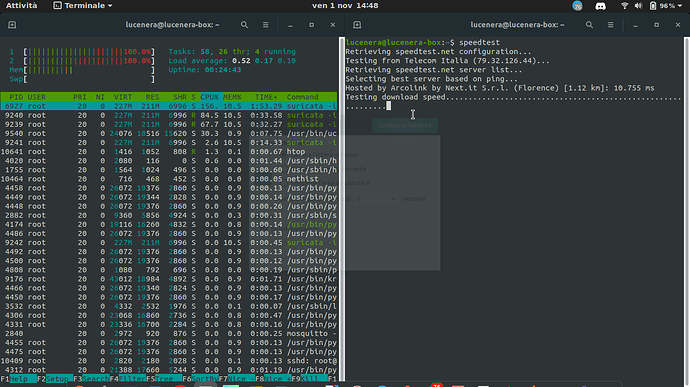

@vcunat maybe he’s right! Here are the top screenshots, one while idle and the other during the speed test. During the speed test, idle drops to 0% and “suricata” reaches 73%. Could it be caused by the data collection? If so, enabling data collection would be a bad idea for anyone, because it would strongly affect their connection speed. Or maybe it only matters above a certain speed.

PS: I have data collection, the SSH honeypot and Ludus enabled.

Yes, 73% in suricata looks like overload. And there’s 56% IRQ, too. I’m more used to tools like atop and htop, so I don’t know the exact top semantics off the top of my head.

I’d try without ludus. I suspect it does use suricata.

Almost: sirq is soft-IRQ context, which, as far as I can tell, is a construct used extensively in Linux networking to process data in a timely manner, but without the strict constraints that a real interrupt context entails. Anyway, for network-related work like Wi-Fi processing or traffic shaping, expect a high sirq percentage…

The 156 CPU% for suricata tells you that suricata is running on both CPUs (the maximum here seems to be 100% * Number_of_CPUs). Interestingly, htop’s per-CPU color bars seem to assign sirq to kernel (red) rather than to the sirq color (a light blue/cyan)…
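As a worked example of that scaling: if a per-process figure can reach 100% * number of CPUs, dividing by the CPU count gives its share of the whole router. A tiny sketch, assuming awk is available; `normalize_pct` is a hypothetical helper name.

```sh
# normalize_pct <per-process %CPU from top/htop> <number of CPUs>
# Converts a figure that can reach 100% * ncpus into a share of the whole machine.
normalize_pct() {
    awk -v p="$1" -v n="$2" 'BEGIN { printf "%.1f\n", p / n }'
}
```

For example, `normalize_pct 156 2` prints `78.0`, i.e. Suricata alone was using about 78% of the dual-core Omnia’s total CPU time. On the router itself the core count can be taken from `grep -c '^cpu[0-9]' /proc/stat`.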

IRQ: the Omnia surely has only single-queue Ethernet “cards”. That means all IRQs arrive at a single CPU core, and Linux distributes that load to multiple cores via the soft-IRQ mechanism. (That’s far less efficient than multi-queue cards, but better than not distributing at all.)
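One way to check how receive processing is actually spread across cores is to compare the per-CPU `NET_RX` counters in `/proc/softirqs` (the per-CPU columns of `/proc/interrupts` for the Ethernet IRQs tell a similar story for hard IRQs). A minimal sketch, assuming the usual `/proc/softirqs` layout (a header row of `CPUn` names, then one row per softirq type); `net_rx_per_cpu` is a hypothetical helper that reads the table from stdin.

```sh
# net_rx_per_cpu: print the NET_RX softirq count for each CPU column.
# Reads /proc/softirqs-formatted text on stdin.
net_rx_per_cpu() {
    awk '
        NR == 1 { for (i = 1; i <= NF; i++) cpu[i] = $i; next }   # header: CPU0 CPU1 ...
        $1 == "NET_RX:" { for (i = 2; i <= NF; i++) print cpu[i - 1], $i }
    '
}
```

Usage: `net_rx_per_cpu < /proc/softirqs`, taken twice a few seconds apart during a speed test. If one CPU’s counter grows much faster than the other’s, receive work is concentrated on that core.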

But in the end, what is it that limits my connection to ~250 Mb/s out of a theoretical 900 Mb/s? In your opinion, will disabling data collection solve the problem?

Suricata certainly seems to be the bottleneck, but I can’t remember exactly which “features” use it (apart from Pakon and maybe Ludus).

The whole data collection part (ssh honeypot and ludus) has been uninstalled and now the values are back to normal. I will do some more tests, but in general the situation is much better.

Mmmh, since I have your attention: I am not sure whether `/etc/hotplug.d/net/20-smp-tune` (if it exists at all) does the right thing on an Omnia. As far as I can tell, it tries to restrict receive packet steering to the CPU that processes the interrupt, which on the Omnia under heavy load seems less than ideal (probably because all IRQs arrive at a single CPU core, as you describe). Changing that manually:

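```sh
# TURRIS Omnia receive/transmit packet steering:
# "3" is a hex CPU bitmask (bit 0 = CPU0, bit 1 = CPU1), i.e. allow both cores.
for dev in /sys/class/net/*; do
    # skip entries that are not interfaces with queues (e.g. bonding_masters)
    [ -d "$dev/queues" ] || continue
    [ -f "$dev/queues/rx-0/rps_cpus" ] && echo 3 > "$dev/queues/rx-0/rps_cpus"
    [ -f "$dev/queues/tx-0/xps_cpus" ] && echo 3 > "$dev/queues/tx-0/xps_cpus"
done
```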

helps the Omnia achieve higher performance when using SQM traffic shaping. I wonder whether this should not be done intelligently by 20-smp-tune (or a replacement/addition) instead. I believe quite a number of ARM SoCs do not perform best with the default 20-smp-tune, which is why the incantation above keeps showing up in odd places on the OpenWrt forum.

I don’t have that file. Still, `ls /sys/class/net/eth?/queues` shows 8 queue pairs on each interface, so perhaps I was hasty about the single-queue assumption. But all of them already have “3” by default, so that shouldn’t be a blocker.
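To see what each queue is currently set to, the hex masks can be decoded into CPU numbers: in `rps_cpus`/`xps_cpus`, bit N stands for CPU N, so “3” means CPU0 and CPU1, and “2” means CPU1 only. A sketch assuming a POSIX shell; `mask_to_cpus` and `show_steering` are hypothetical helpers, and `eth0` in the usage line is only an example interface name.

```sh
# mask_to_cpus <hex mask, e.g. "3" or "00000003"> -> space-separated CPU numbers
mask_to_cpus() {
    m=$(printf '%s' "$1" | tr -d ',')    # larger masks are comma-separated words
    v=$((0x$m))
    cpu=0
    out=""
    while [ "$v" -gt 0 ]; do
        if [ $((v & 1)) -eq 1 ]; then out="$out $cpu"; fi
        v=$((v >> 1))
        cpu=$((cpu + 1))
    done
    echo "${out# }"
}

# show_steering <iface>: print every RPS/XPS mask of the interface, decoded
show_steering() {
    for q in /sys/class/net/"$1"/queues/rx-*/rps_cpus /sys/class/net/"$1"/queues/tx-*/xps_cpus; do
        [ -f "$q" ] || continue
        mask=$(cat "$q" 2>/dev/null) || continue
        echo "$q: $mask (CPUs: $(mask_to_cpus "$mask"))"
    done
}
```

Usage: `show_steering eth0`. A mask of 0 (empty CPU list) means RPS is off for that queue.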

One thing to note is that each given 5-tuple [(source + dest) * (port + addr) + protocol] gets consistently hashed into a single queue, so trivial benchmarks using a single 5-tuple can’t utilize the advantage. Fortunately, real-life workloads usually don’t look like that.
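The effect can be illustrated with a toy model: hash the 5-tuple and take the result modulo the number of queues. Here `cksum` is used purely as a stand-in hash; the kernel and the NIC use their own flow hash, so real queue numbers will differ, but the property is the same: one tuple always maps to one queue, while many tuples spread out. `toy_queue` is a hypothetical helper, and the addresses are documentation examples.

```sh
# toy_queue <src_addr> <src_port> <dst_addr> <dst_port> <proto> <nqueues>
# Toy model only: cksum's CRC stands in for the real flow hash.
# Assumes 64-bit shell arithmetic (CRC values go up to 2^32 - 1).
toy_queue() {
    h=$(printf '%s|%s|%s|%s|%s' "$1" "$2" "$3" "$4" "$5" | cksum | cut -d' ' -f1)
    echo $(( h % $6 ))
}

# Same flow, same queue, every time:
toy_queue 192.0.2.10 40000 198.51.100.1 443 tcp 8
toy_queue 192.0.2.10 40000 198.51.100.1 443 tcp 8

# Different source ports (i.e. parallel streams) usually land on different queues:
for port in 40001 40002 40003 40004; do
    toy_queue 192.0.2.10 "$port" 198.51.100.1 443 tcp 8
done
```

In practice this is why a multi-stream test (for example iperf3 with `-P 4`) says more about the router’s ceiling than a single TCP stream does.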

Very interesting, thanks for sharing your insight. I didn’t realize kernel perf tuning may not apply in this situation.

Mmmh, I will look at my Omnia (TOS4) once I am back home. All I know is that when stress-testing SQM I saw these values set to 2, and setting them to 3 helped; but I might have made errors, so take this with a grain of salt until I have rechecked.

My number is from TOS3 Omnia (RC, I think).

EDIT: I suspect in “normal” workloads it will be best to have queues chained to cores, one per core, i.e. one with “2” and one with “1” (and others disabled). (By “one” I mean an RX+TX pair.)
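A sketch of that “one queue pair per core” idea, assuming the interface exposes `rx-N`/`tx-N` queue directories as described above: queue pair N gets the single-CPU mask 1<<N (so “1” for CPU0 and “2” for CPU1) for both `rps_cpus` and `xps_cpus`. `cpu_mask` and `pin_queue_pairs` are hypothetical helpers. The remaining queues are left as they are; actually disabling extra hardware queues would need driver support (for example via `ethtool -L`, if the driver implements it) and is not attempted here.

```sh
# cpu_mask <cpu number> -> hex mask with only that CPU's bit set
cpu_mask() {
    printf '%x\n' $(( 1 << $1 ))
}

# pin_queue_pairs <iface> <ncpus>: queue pair N -> CPU N (run as root)
pin_queue_pairs() {
    i=0
    while [ "$i" -lt "$2" ]; do
        rx=/sys/class/net/$1/queues/rx-$i/rps_cpus
        tx=/sys/class/net/$1/queues/tx-$i/xps_cpus
        [ -f "$rx" ] && cpu_mask "$i" > "$rx"
        [ -f "$tx" ] && cpu_mask "$i" > "$tx"
        i=$((i + 1))
    done
}
```

Usage: `pin_queue_pairs eth0 2`, then re-read the `rps_cpus`/`xps_cpus` files to confirm. Like the loop earlier in the thread, this does not persist across reboots or interface restarts.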

Ah, I believe this is a relatively recent addition (to 18.06):

https://git.openwrt.org/?p=openwrt/openwrt.git;a=commit;h=916e33fa1e14b97daf8c9bf07a1b36f9767db679

For TOS4, it would be great if one of your hardware people could check whether that is ideal for the Omnia.