After a little more trial and error, I’m even more confused. It turns out that some of the speeds differ depending on the direction of the traffic (i.e. testing with ‘iperf3 -c host’ vs ‘iperf3 -c host -R’). My original diagram was also slightly wrong: it showed PC1 ↔ PC2 at 50MB/s, but it’s actually 100MB/s.

So here’s what I get when I break down transmission speed by direction. Arrows show the direction of transmission, and I’m leaving off PC4 since its characteristics are identical to PC3’s:

PC1 ↔ PC2: 100MB/s (in either direction)

PC1 → PC3: 50MB/s

PC1 ← PC3: 100MB/s

PC1 ↔ PC5: 100MB/s (in either direction)

PC2 ↔ PC3: 100MB/s (in either direction)

PC2 → PC5: 50MB/s

PC2 ← PC5: 100MB/s

PC3 ↔ PC5: 100MB/s (in either direction)

I’m very confused…

As for CPU usage on the Turris Omnia: running htop, I see the system CPU usage get up to 70 or 80 percent, but no individual process shows up with high CPU usage. Earlier, when I said I saw [ksoftirqd], I misremembered. I do see this process show up in htop/top when I do bandwidth tests, but it only uses 3 or 4 percent CPU.

I looked at the bandwidth per interface with ‘bmon’ during these tests. It shows the same bandwidth I’m seeing in iperf3. I thought maybe it had something to do with the fact that lan0, lan2, and lan4 share eth1, and lan1 and lan3 share eth0, but both lan3 and lan4 exhibit the same behavior when communicating with lan0.

Another odd thing is that in the cases where I only have 50MB/s of bandwidth, the number of retransmissions shown by iperf3 is non-zero. It averages around 400 retransmits per second. When the bandwidth is 100MB/s, the number of retransmits is 0 or close to it.
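For anyone who wants to reproduce the comparison, here is a rough sketch of how both directions could be measured and the throughput and retransmit rate pulled out of iperf3’s JSON output. It assumes iperf3 is installed locally and a server (‘iperf3 -s’) is already running on the target; the function names are made up for illustration, and the retransmit field is only present for TCP tests.

```python
import json
import subprocess


def summarize(result):
    """Extract sender throughput and retransmit rate from parsed iperf3 JSON."""
    sent = result["end"]["sum_sent"]
    seconds = sent["seconds"]
    retransmits = sent.get("retransmits")  # absent for UDP tests
    return {
        # iperf3 reports bits per second; convert to megabytes per second
        "MB_per_s": sent["bits_per_second"] / 8 / 1_000_000,
        "retransmits_per_s": None if retransmits is None else retransmits / seconds,
    }


def run_iperf3(host, reverse=False, seconds=10):
    """Run one iperf3 client test; reverse=True adds -R (server sends to client)."""
    cmd = ["iperf3", "-c", host, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return summarize(json.loads(out))


def compare_directions(host):
    """Measure both directions against the same host (hypothetical helper)."""
    return {
        "forward": run_iperf3(host),
        "reverse": run_iperf3(host, reverse=True),
    }
```

Calling `compare_directions("host")` from each PC gives one small dictionary per pair, which makes it easier to line up the slow direction with the non-zero retransmit rate.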

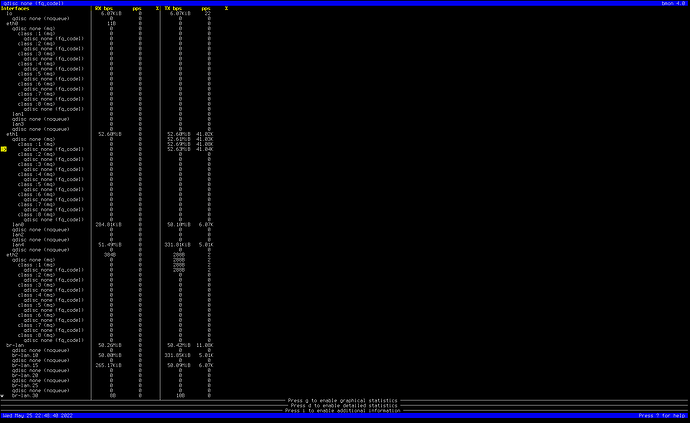

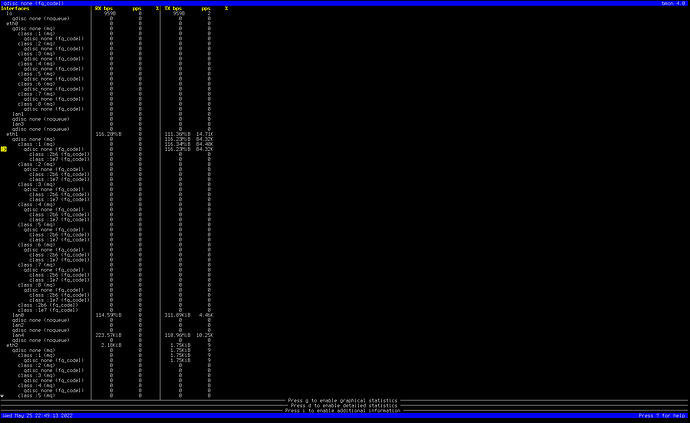

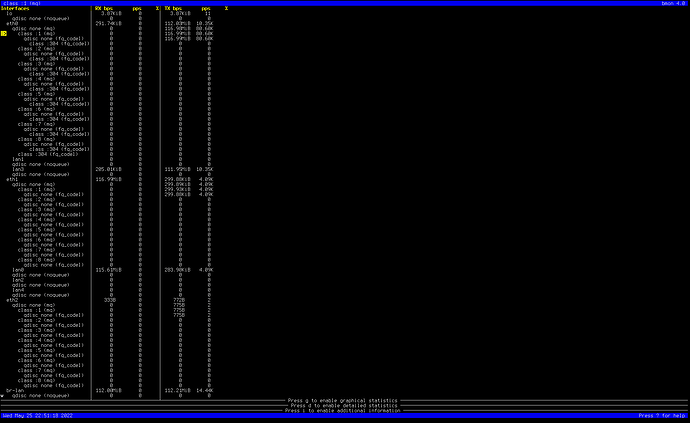

Here’s something interesting that looks suspicious. I looked at the number of packets per second shown in bmon. Looking at eth0 or eth1, when I’m getting 50MB/s, the pps (packets per second) tops out around 41k. When I’m getting 100MB/s, the individual queue inside eth0 or eth1 shows 80k or 90k, but eth0/eth1 itself only shows 14k. I wonder if packets are being fragmented in one direction but not in the other. I re-tested with ‘iperf3 --udp -b 900M’ to see if UDP instead of TCP made a difference, but the results were the same.
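As a quick sanity check on those counters, the implied average packet size can be worked out by dividing the throughput by the packet rate. This is only a back-of-the-envelope sketch: it assumes 1MB = 1,000,000 bytes and takes the rounded bmon figures above at face value.

```python
def avg_packet_bytes(mb_per_s, pps):
    """Average bytes per packet implied by a throughput and a packet rate."""
    return mb_per_s * 1_000_000 / pps


# (label, throughput in MB/s, packets per second) taken from the bmon readings
observations = [
    ("50MB/s, eth0/eth1 at 41k pps", 50, 41_000),
    ("100MB/s, eth0/eth1 at 14k pps", 100, 14_000),
    ("100MB/s, queue at 80k pps", 100, 80_000),
    ("100MB/s, queue at 90k pps", 100, 90_000),
]

for label, mb, pps in observations:
    print(f"{label}: ~{avg_packet_bytes(mb, pps):.0f} bytes/packet")
```

The 41k, 80k, and 90k readings all work out to roughly 1,100 to 1,250 bytes per packet, which is in the range of ordinary full-size frames. The 14k reading works out to roughly 7,100 bytes per packet, which is larger than a standard 1500-byte MTU allows, so (assuming no jumbo frames) that counter may be counting aggregated packets rather than individual frames on the wire.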

I’ve attached some screenshots of bmon in the different scenarios.

PC1 to PC3 (50MB/s)

PC3 to PC1 (100MB/s)

PC2 to PC5 (50MB/s)

PC5 to PC2 (100MB/s)

Any suggestions for what to look at next?