Is it possible to fork a thread?

Anyway, I used the recommended speed test at DSLReports ("Speed test - how fast is your internet?").

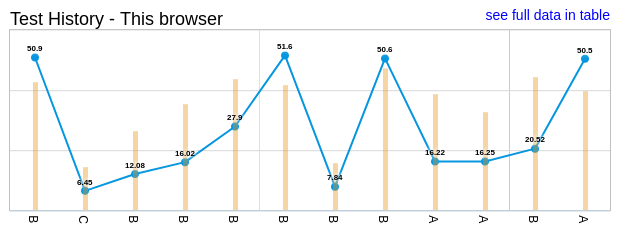

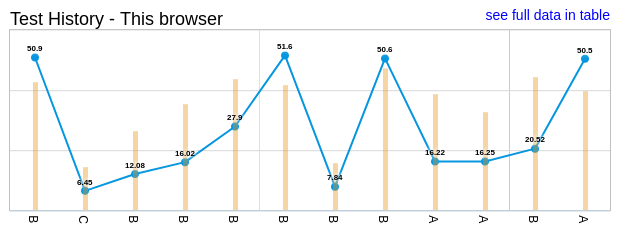

Here are the results. You can clearly see the four runs where I disabled SQM (the first one, the last one, and two in the middle to verify that the line was still OK).

After re-enabling SQM with the above-mentioned settings, I get the following output from the commands you mentioned.

Peter

    {
        "up": true,
        "pending": false,
        "available": true,
        "autostart": true,
        "dynamic": false,
        "uptime": 1587452,
        "l3_device": "eth0",
        "proto": "dhcp",
        "device": "eth0",
        "metric": 0,
        "dns_metric": 0,
        "delegation": true,
        "ipv4-address": [
            {
                "address": "192.168.2.100",
                "mask": 24
            }
        ],
        "ipv6-address": [
        ],
        "ipv6-prefix": [
        ],
        "ipv6-prefix-assignment": [
        ],
        "route": [
            {
                "target": "0.0.0.0",
                "mask": 0,
                "nexthop": "192.168.2.1",
                "source": "192.168.2.100/32"
            }
        ],
        "dns-server": [
            "192.168.2.1"
        ],
        "dns-search": [
            "speedport.ip"
        ],
        "neighbors": [
        ],
        "inactive": {
            "ipv4-address": [
            ],
            "ipv6-address": [
            ],
            "route": [
            ],
            "dns-server": [
            ],
            "dns-search": [
            ],
            "neighbors": [
            ]
        },
        "data": {
            "leasetime": 1814400
        }
    }
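
For anyone who wants to pull the relevant fields out of a status dump like the one above without reading it line by line, here is a minimal Python sketch. The embedded JSON is a trimmed copy of the dump, and the helper name `summarize_interface` is made up for illustration.

```python
import json

# Trimmed copy of the interface status dump above (only the fields used here).
STATUS_JSON = """
{
  "up": true,
  "l3_device": "eth0",
  "proto": "dhcp",
  "ipv4-address": [{"address": "192.168.2.100", "mask": 24}],
  "route": [{"target": "0.0.0.0", "mask": 0, "nexthop": "192.168.2.1"}]
}
"""


def summarize_interface(raw: str) -> dict:
    """Return state, layer-3 device, first IPv4 address and default gateway."""
    status = json.loads(raw)
    addr = status["ipv4-address"][0]
    # A default route is the entry with target 0.0.0.0 and mask 0.
    default_routes = [
        r for r in status["route"] if r["target"] == "0.0.0.0" and r["mask"] == 0
    ]
    return {
        "up": status["up"],
        "device": status["l3_device"],
        "cidr": f'{addr["address"]}/{addr["mask"]}',
        "gateway": default_routes[0]["nexthop"] if default_routes else None,
    }


summary = summarize_interface(STATUS_JSON)
print(summary)
```

The `device` value is the name to compare against the interface that SQM is configured on.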

    config queue 'eth1'
        option interface 'eth1'
        option qdisc_advanced '0'
        option debug_logging '0'
        option verbosity '5'
        option qdisc 'cake'
        option script 'piece_of_cake.qos'
        option linklayer 'atm'
        option overhead '44'
        option enabled '1'
        option download '45000'
        option upload '10000'
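
The `download` and `upload` values in this config are in kbit/s, so they should correspond to the 45Mbit and 10Mbit that cake reports in the qdisc listing. A small Python sketch of that check, assuming a simplified parser that only understands `option name 'value'` lines (`parse_options` is a hypothetical helper, not part of any OpenWrt tool):

```python
# Subset of the SQM config above, embedded so the example is self-contained.
SQM_CONFIG = """\
config queue 'eth1'
    option interface 'eth1'
    option qdisc 'cake'
    option script 'piece_of_cake.qos'
    option linklayer 'atm'
    option overhead '44'
    option enabled '1'
    option download '45000'
    option upload '10000'
"""


def parse_options(text: str) -> dict:
    """Collect `option <name> '<value>'` lines into a dict (simplified)."""
    options = {}
    for line in text.splitlines():
        parts = line.split(None, 2)
        if len(parts) == 3 and parts[0] == "option":
            options[parts[1]] = parts[2].strip().strip("'")
    return options


opts = parse_options(SQM_CONFIG)
# Rates are configured in kbit/s; convert to Mbit/s for comparison with tc.
download_mbit = int(opts["download"]) / 1000
upload_mbit = int(opts["upload"]) / 1000
print(f'{opts["interface"]}: {download_mbit:g} Mbit/s down, {upload_mbit:g} Mbit/s up')
```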

    qdisc noqueue 0: dev lo root refcnt 2
    qdisc mq 0: dev eth0 root
    qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
    qdisc cake 8035: dev eth1 root refcnt 9 bandwidth 10Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100.0ms atm overhead 44
    qdisc ingress ffff: dev eth1 parent ffff:fff1 ----------------
    qdisc noqueue 0: dev lan1 root refcnt 2
    qdisc noqueue 0: dev lan2 root refcnt 2
    qdisc noqueue 0: dev lan3 root refcnt 2
    qdisc noqueue 0: dev lan4 root refcnt 2
    qdisc noqueue 0: dev lan5 root refcnt 2
    qdisc noqueue 0: dev lan6 root refcnt 2
    qdisc noqueue 0: dev lan7 root refcnt 2
    qdisc noqueue 0: dev lan8 root refcnt 2
    qdisc noqueue 0: dev lan9 root refcnt 2
    qdisc noqueue 0: dev lan10 root refcnt 2
    qdisc noqueue 0: dev lan11 root refcnt 2
    qdisc noqueue 0: dev lan12 root refcnt 2
    qdisc noqueue 0: dev lan13 root refcnt 2
    qdisc noqueue 0: dev lan14 root refcnt 2
    qdisc noqueue 0: dev lan15 root refcnt 2
    qdisc noqueue 0: dev lan16 root refcnt 2
    qdisc noqueue 0: dev br-guest root refcnt 2
    qdisc noqueue 0: dev br-lan root refcnt 2
    qdisc noqueue 0: dev br-office root refcnt 2
    qdisc noqueue 0: dev br-printer root refcnt 2
    qdisc noqueue 0: dev br-testing root refcnt 2
    qdisc noqueue 0: dev br-wlan root refcnt 2
    qdisc cake 8036: dev ifb4eth1 root refcnt 2 bandwidth 45Mbit besteffort triple-isolate nonat wash no-ack-filter split-gso rtt 100.0ms atm overhead 44
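
Both cake instances run with `atm overhead 44`. As a sanity check on what that means for the shaper, the following Python sketch does the ATM cell arithmetic: add the per-packet overhead, round up to whole 48-byte cell payloads, and charge 53 bytes per cell. The function name `atm_wire_size` is made up for this example. The results agree with the "min/max overhead-adjusted size" lines in the statistics output further down (106 / 1749 on eth1, 106 / 1696 on ifb4eth1).

```python
ATM_CELL_PAYLOAD = 48  # payload bytes carried per ATM cell
ATM_CELL_SIZE = 53     # bytes on the wire per ATM cell (5-byte header + payload)


def atm_wire_size(packet_len: int, overhead: int) -> int:
    """Bytes accounted for a packet once overhead and ATM cell framing are added."""
    total = packet_len + overhead
    cells = -(-total // ATM_CELL_PAYLOAD)  # integer ceiling division
    return cells * ATM_CELL_SIZE


OVERHEAD = 44  # from `option overhead '44'`

# Network-layer sizes taken from the cake statistics of both directions.
for size in (28, 1500, 50, 1456):
    print(size, "->", atm_wire_size(size, OVERHEAD))
```

A full 1500-byte packet therefore costs 1749 bytes of shaped capacity, about 17% more than its nominal size.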

    qdisc noqueue 0: dev lo root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc mq 0: dev eth0 root
     Sent 13985429198 bytes 72307043 pkt (dropped 0, overlimits 0 requeues 1)
     backlog 0b 0p requeues 1
    qdisc fq_codel 0: dev eth0 parent :8 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :7 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :6 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :5 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :4 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :3 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :2 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
      maxpacket 0 drop_overlimit 0 new_flow_count 0 ecn_mark 0
      new_flows_len 0 old_flows_len 0
    qdisc fq_codel 0: dev eth0 parent :1 limit 10240p flows 1024 quantum 1514 target 5.0ms interval 100.0ms memory_limit 4Mb ecn
     Sent 13985429198 bytes 72307043 pkt (dropped 0, overlimits 0 requeues 1)
     backlog 0b 0p requeues 1
      maxpacket 1466 drop_overlimit 0 new_flow_count 652 ecn_mark 0
      new_flows_len 0 old_flows_len 0

    qdisc cake 8035: dev eth1 root refcnt 9 bandwidth 10Mbit besteffort triple-isolate nonat nowash no-ack-filter split-gso rtt 100.0ms atm overhead 44
     Sent 100177517 bytes 73927 pkt (dropped 3467, overlimits 214537 requeues 0)
     backlog 0b 0p requeues 0
     memory used: 792Kb of 4Mb
     capacity estimate: 10Mbit
     min/max network layer size: 28 / 1500
     min/max overhead-adjusted size: 106 / 1749
     average network hdr offset: 18

                      Tin 0
      thresh         10Mbit
      target          5.0ms
      interval      100.0ms
      pk_delay        7.5ms
      av_delay        4.6ms
      sp_delay         14us
      backlog            0b
      pkts            77394
      bytes       105078902
      way_inds           96
      way_miss          262
      way_cols            0
      drops            3467
      marks               0
      ack_drop            0
      sp_flows            2
      bk_flows            1
      un_flows            0
      max_len          7590
      quantum           305

    qdisc ingress ffff: dev eth1 parent ffff:fff1 ----------------
     Sent 4355258 bytes 32876 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan1 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan2 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan3 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan4 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan5 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan6 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan7 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan8 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan9 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan10 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan11 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan12 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan13 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan14 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan15 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev lan16 root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-guest root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-lan root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-office root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-printer root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-testing root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0
    qdisc noqueue 0: dev br-wlan root refcnt 2
     Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)
     backlog 0b 0p requeues 0

    qdisc cake 8036: dev ifb4eth1 root refcnt 2 bandwidth 45Mbit besteffort triple-isolate nonat wash no-ack-filter split-gso rtt 100.0ms atm overhead 44
     Sent 4813064 bytes 32874 pkt (dropped 2, overlimits 6791 requeues 0)
     backlog 0b 0p requeues 0
     memory used: 96768b of 4Mb
     capacity estimate: 45Mbit
     min/max network layer size: 50 / 1456
     min/max overhead-adjusted size: 106 / 1696
     average network hdr offset: 14

                      Tin 0
      thresh         45Mbit
      target          5.0ms
      interval      100.0ms
      pk_delay        314us
      av_delay         24us
      sp_delay          9us
      backlog            0b
      pkts            32876
      bytes         4815522
      way_inds            0
      way_miss            1
      way_cols            0
      drops               2
      marks               0
      ack_drop            0
      sp_flows            0
      bk_flows            1
      un_flows            0
      max_len          1470
      quantum          1373
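
To put the drop counters into perspective, here is a short Python sketch that computes the share of packets each cake instance dropped. The numbers are copied from the statistics above; the `CakeCounters` class is only an illustration. In both directions `pkts` equals sent plus dropped packets, so the sketch uses `pkts` as the denominator.

```python
from dataclasses import dataclass


@dataclass
class CakeCounters:
    device: str
    sent_pkts: int  # "Sent ... pkt" line
    dropped: int    # "dropped" on the same line
    tin_pkts: int   # "pkts" row of the Tin 0 column

    def drop_fraction(self) -> float:
        """Dropped packets as a fraction of all packets cake handled."""
        return self.dropped / self.tin_pkts


egress = CakeCounters("eth1", sent_pkts=73927, dropped=3467, tin_pkts=77394)
ingress = CakeCounters("ifb4eth1", sent_pkts=32874, dropped=2, tin_pkts=32876)

for c in (egress, ingress):
    # Consistency check: every packet counted was either sent or dropped.
    assert c.sent_pkts + c.dropped == c.tin_pkts
    print(f"{c.device}: {c.drop_fraction():.2%} dropped")
```

With these counters the upload side (eth1) dropped roughly 4.5% of its packets, while the download side (ifb4eth1) dropped almost none.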