- My Turris as a router (measured with its own netmeter) is the fast one (100-120 Mbps), while on any other device (the MOX as a simple local network host, or any notebook connected by cable) downloads are limited to about 23 Mbps.

- I’ve tested both, each as a single client on UTP.

- Firefox and Chromium/Chrome (on Fedora and Win10)

- netmeter.cz is what I use for tests; I can compare its results with the netmeter results from the Turris 1.0 (router) and from the MOX (ordinary client).

I understand… The problem with testing connection speed is that both sides of the test affect the result. Have you tried another web speed test too… to check the relevance of the results?

www.speedtest.ro

www.netmeter.cz

www.speedtest.net … or the Speedtest app

Let’s see if the results are similar. As another possible cause, I can think of QoS limits.

Just to make it clear: I have no issue with the ISP speed, only when going through the Turris. When connected directly to the provider’s Ethernet switch, all the speed tests are perfectly OK according to the service parameters declared by the provider. My problem is that when the test is executed on the Turris itself, the speed is OK, but once I run the test from any client, it is something like 20-23 Mbps.

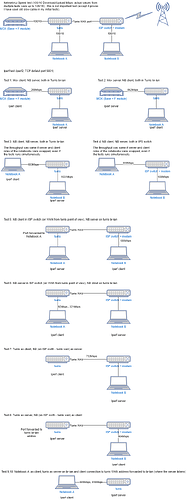

I’ve tried to put together a drawing of the tests I’ve done:

On a 1 Gbps LAN I’d be happy with anything above 900 Mbps, but some of the tests are far from that. At least, running the tests once more, I’ve realized that my initial test, with a client downloading at 20 Mbps instead of ~100 Mbps, was a failure on my side (cable…). I’ve used a set of new Cat 5e cables, and this seems to have resolved one part of my problems, but the results of many other tests still puzzle me.

By the way, it looks like my MOX is not as good at networking as I’d expect: I observed the same performance figures when connecting to the MOX directly from the notebook switch, and the MOX as a client is very slow. But that is a different story; I’m interested in getting my Turris into good shape first.

Thanks for any comments.

Sounds like a reasonable expectation, provided the T clients (M & Notebook A) are each connected to one of T’s LAN ports, with no VLAN tagging or packet mirroring involved (presumably the case).

The datasheet for the QCA8337N switch chip (in the T), though, mentions features of its switch engine that could come into play:

- QoS engine

- Bandwidth control

- Queue manager

some of whose parameters are settable via registers.

With 280 Mbit/s for the M as iperf client (via T), this could be attributed to the M, as the same limitation appears to show up in this scenario too.

If feasible, replace the M as iperf client (via T) with another suitable desktop/laptop, just to rule out the M.

If you click on the picture, you’ll see 10 tests described; the preview shows only a few of them. I’ve already done the MOX replacement test.

Missed that; it looks like thorough testing and documentation.

- tests 4 & 5 indicate that the T’s switch chip is not the cause and performs within reasonable parameters

- tests 9 & 10 indicate that the T’s WAN port and CPU are not the cause and perform within reasonable parameters on direct ingress/egress

- test 8 indicates that the ISP’s CPE is probably not the cause either (on frame forwarding)

From the remainder (tests 6 & 7), it seems that the T suffers from some issue with packet processing on ingress.

Is PAKON installed/running on the T? It was mentioned in other threads as a cause of throughput issues.

Yes, PAKON is installed and active. I can uninstall it for testing; it is not critical for me.

I’ll do the tests later, since right now the VPN is essential, and I have to physically move to the Turris to avoid any cable issues…

Thanks for the suggestion!

Yes, try this. PAKON needs to touch and process each packet passing through your router, beyond what the network stack usually does. PAKON is great, but like most nice things it does not come for free: in this case it probably requires more CPU cycles than your router can spare, and once that happens everything slows down.

I’ve uninstalled PAKON and tested using a short (2 m) cable, and the client PC works well. Surprisingly, installing PAKON back did not make it visibly slower; downloads were still a bit above 100 Mbps.

Might it be that PAKON needed to be reinstalled? The remaining test is to use the MOX as a client; if that works, I’ll have to admit that it works well now, but for no particular known reason…

TBH, you might be right (Performance degradation with pakon or device discovery - #19 by Maxmilian_Picmaus - Omnia HW problems - Turris forum). And maybe it is not just Pakon; maybe it is all about Suricata (after the split into suricata-bin) and the related packages/services (pakon, ludus, ids, suricata itself), which you need to remove and install again. Personally, I am not using IDS or Pakon at all; I only have Ludus. I suspect that each package has a YAML config that its service loads, and outdated/obsolete ones might cause the issues we faced.

Weird things happen. My lesson learned: do not use a Turris 1.0 or MOX as an iperf server, nor (definitely not) as a client.

Frustrated by all the tests, I replaced the RJ45 connectors one by one… and ended up with:

- even on a 1 m long factory-made Cat 5e cable (including connectors), maximum throughput was 246 Mbps (Turris as iperf client, notebook as iperf server)

- even on a 1 m long factory-made Cat 5e cable, maximum throughput was 272 Mbps (Turris as iperf server, notebook as iperf client)

- Omnia (20 m Cat 5e cable) as client, Turris 1.0 as server: 612 Mbps

- Turris 1.0 as client (20 m Cat 5e cable), Omnia as iperf server: 241 Mbps

On the other hand, when I used two notebooks, one connected to the Turris 1.0 with the above factory-made 1 m Cat 5e cable and the second one behind 20 m of Cat 5e cable, a switch, and another 15 m of Cat 5e cable, the network throughput was 855/905 Mbps (swapping the client/server roles of the notebooks).

With the same setup (20 m cable, switch) between a notebook and the Omnia, I got 941/934 Mbps.

The sad part of this testing is that the Turris MOX is far from being as good as the Omnia router, because:

With the MOX as iperf server and the Omnia as iperf client (switch, 20 m of cable), it looks promising at 919 Mbps, while with the Omnia as iperf server and the MOX as iperf client I got only 310 Mbps.

Pakon was uninstalled on all the routers used; iperf2 was used for the tests.

My summary:

Part of the issue was probably caused by a bad RJ45 connector (after replacing them, I was able to reach the expected network throughput), and long-term use of Pakon might have contributed to the speed degradation as well: I haven’t reinstalled it since replacing the cable connectors, and some improvement was achieved just by uninstalling Pakon, even before the cable tests.

The Turris 1.0 and MOX are not good devices for running iperf in any tests, but the switch chip in the Turris 1.0 is capable of handling 1 Gbps.

As far as I understand, Cat 5e is unshielded and has a maximum bandwidth of 100 MHz.

It could be interesting to see whether:

- Cat 6 shielded (>250 MHz)

- Cat 6a shielded (500 MHz)

- Cat 7 (600 MHz)

- Cat 7a (1,000 MHz)

- Cat 8.1 (2,000 MHz)

would make a difference, particularly considering unshielded cabling over distances of 20 m.

It would also be interesting to see whether the MOX throughput improves with the 5.4.x Linux kernel (HBD branch).

Category 5e is the first cable category supporting gigabit (this does not apply to Category 5), see the Wiki page. In my case, the Cat 5e cables are already the shielded version. I know Cat 6 also has a different mechanical construction, mandatory shielding, and different connectors.

I would not change the branch on the MOX: the last time I did it, it broke the NextCloud certificates (or something else, but existing clients were refusing to connect to it). NextCloud is a very bad piece of software. I bought the MOX just to be able to abandon Dropbox, but NextCloud is so bad that I can’t do that. For example, it is possible to upload a video file larger than 1 GB from a mobile client, since it uploads files in chunks, but no client I’ve found (tested on Windows and on Linux) is able to download the file in chunks, and the NextCloud server tries to load the WHOLE file into memory before sending it to the client. The result on the MOX is an OOM and a reboot, but that is a different story. So if there is someone not suffering from NextCloud who is open to running a test on the dev branch, it’ll be interesting.

Switching to HBD is probably more of an issue because of potential downtime. One could switch to HBD, test with iperf, and afterwards roll back to the working snapshot via schnapps.

When you experienced the low throughput, assuming packets went through the CPU, did you happen to monitor packet statistics (drops, errors, overruns) on the involved interfaces?
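For the kind of check asked about here, Linux exposes cumulative per-interface counters in `/proc/net/dev` (8 receive columns followed by 8 transmit columns). The sketch below is a minimal, hypothetical helper, not a Turris tool: it parses that file and keeps the non-zero error, drop and FIFO counters (the FIFO column is what net-tools `ifconfig` reports as overruns). Because the counters accumulate since boot, reading them before and after an iperf run and comparing the two would be the meaningful test. The function names are made up for this example.

```python
import os
from typing import Dict

# Column order in /proc/net/dev: 8 receive counters followed by 8 transmit counters.
RX_FIELDS = ["bytes", "packets", "errs", "drop", "fifo", "frame", "compressed", "multicast"]
TX_FIELDS = ["bytes", "packets", "errs", "drop", "fifo", "colls", "carrier", "compressed"]
PROBLEM_KEYS = ("rx_errs", "rx_drop", "rx_fifo", "tx_errs", "tx_drop", "tx_fifo")


def parse_proc_net_dev(text: str) -> Dict[str, Dict[str, int]]:
    """Map each interface name to its counters, e.g. stats["eth0"]["rx_drop"]."""
    stats: Dict[str, Dict[str, int]] = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        if ":" not in line:
            continue
        name, counters = line.split(":", 1)
        values = [int(v) for v in counters.split()]
        row = {f"rx_{k}": v for k, v in zip(RX_FIELDS, values[:8])}
        row.update({f"tx_{k}": v for k, v in zip(TX_FIELDS, values[8:16])})
        stats[name.strip()] = row
    return stats


def problem_counters(stats: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, int]]:
    """Keep only the non-zero error, drop and FIFO (overrun) counters per interface."""
    return {
        iface: {k: row[k] for k in PROBLEM_KEYS if row.get(k)}
        for iface, row in stats.items()
    }


if __name__ == "__main__" and os.path.exists("/proc/net/dev"):
    with open("/proc/net/dev") as f:
        for iface, bad in problem_counters(parse_proc_net_dev(f.read())).items():
            print(iface, bad or "no errors/drops/overruns")
```

Running it on the router and on the client at the same moments would show on which side, if any, packets are being dropped.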

Using a snapshot for HBD is a good point!

Regarding your second question, I did not collect anything beyond the several tc outputs posted earlier in this discussion. From my point of view it works now (almost gigabit can be reached). I could consider installing Pakon back to see whether it affects network throughput (but I assume the effect might only become noticeable after several months, if the theory is right that a stale piece of Pakon config affected router performance on packet inspection; in any case, the cause might be something already removed from the present Pakon version, and thus not reproducible).

I have Cat 6A; the cable category does not seem to matter.

I got only 917 Mbit/s in iperf3 with the latest TOS (Turris <> switches <> desktop).

I even upgraded my NIC: my PC (Ryzen 3600) now has an Aquantia 10 Gbit card.

To my NAS I am getting about 980 Mbit/s, absolutely stable, while transferring files not directly from the NAS but from its USB3 drive, which complicates things but still does not bottleneck anything.

I got a new Wi-Fi 6 AP: with multiple streams (the -P option in iperf) I was able to hit 800 Mbit/s on the path iPad Pro (2021) <> AP <> Switch_1 <> Switch2 <> desktop Gbit NIC (maybe I could retest with a better NIC).

So in conclusion:

- onboard NICs on ordinary motherboards are often not as good as 10 Gbit PCIe cards with more recent chipsets

- the Turris has a bottleneck somewhere (as its CPU is already quite old, it might be the limiting factor)

- Wi-Fi 6 devices perform several times better when using parallel connections

EDIT: I have just checked, and iperf only loads the Omnia to about 15%.

Most tests like iperf3 report application-level throughput. For TCP/IPv4 over Ethernet without VLAN tagging, the upper limit at the default MTU of 1500 is:

1000 * ((1500-20-20)/(1500+38)) = 949.28 Mbps

and even with UDP instead of TCP you will at best see:

1000 * ((1500-20-8)/(1500+38)) = 957.09 Mbps

So I’m not sure at which layer the 980 Mbps was measured/reported… but if 1 Gbps Ethernet was involved, this number is not a goodput number…

You are seeing an efficiency of 100*917/949 = 96.6%, not great, but also not bad. Also, unless you use the Turris as a file server, running iperf3 on the Turris itself does not test its normal capabilities as a router.
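The arithmetic in the posts above can be checked with a few lines of Python. This is a minimal sketch that assumes the standard per-frame Ethernet overhead of 38 bytes (7-byte preamble, 1-byte start-of-frame delimiter, 14-byte MAC header, 4-byte FCS, 12-byte inter-frame gap), full-size packets and no TCP options; it computes the goodput ceiling and expresses a measured figure as a percentage of it. The helper names are illustrative only.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble 7 + start-of-frame delimiter 1 + MAC header 14 + FCS 4 + inter-frame gap 12
ETH_OVERHEAD = 7 + 1 + 14 + 4 + 12  # = 38


def goodput_mbps(mtu, l3_header, l4_header, extra_l2=0, line_rate_mbps=1000):
    """Upper bound on application payload rate when every frame is full-size."""
    payload = mtu - l3_header - l4_header    # user data carried per packet
    on_wire = mtu + ETH_OVERHEAD + extra_l2  # bytes the link spends per packet
    return line_rate_mbps * payload / on_wire


def efficiency_percent(measured_mbps, ceiling_mbps):
    """Measured throughput as a percentage of the theoretical goodput ceiling."""
    return 100.0 * measured_mbps / ceiling_mbps


tcp_v4 = goodput_mbps(1500, 20, 20)  # IPv4 header 20 B, TCP header 20 B
udp_v4 = goodput_mbps(1500, 20, 8)   # IPv4 header 20 B, UDP header 8 B
print(f"TCP/IPv4 ceiling: {tcp_v4:.2f} Mbps")  # 949.28
print(f"UDP/IPv4 ceiling: {udp_v4:.2f} Mbps")  # 957.09
print(f"917 Mbps measured: {efficiency_percent(917, tcp_v4):.1f} % of ceiling")  # 96.6
print(f"980 Mbps reported: {efficiency_percent(980, tcp_v4):.1f} % of ceiling")  # 103.2
```

A result above 100 % means the figure cannot be TCP goodput over 1 Gbps Ethernet, which matches the remark about the 980 Mbps number.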

Do you have a calculation for VLAN tagging?

I have it activated, but I am not sure which interface this traffic took place on by default. I don’t use anything special: VLAN 1 is the default VLAN that is active on every interface, unless I tag on the switch when necessary.

It is very likely that the traffic on VLAN 1 did not use any tagging at all, and as I said, that’s the interface I used during testing.

A single VLAN tag is inserted into the Ethernet header, so add 4 bytes to the per-packet overhead:

1000 * ((1500-20-20)/(1500+38+4)) = 946.82 Mbps

Whether the VLAN tag was rate-limiting or not, I cannot tell for your setup.

Please note that IPv6 has somewhat lower goodput, since the IPv6 header is 40 bytes compared to IPv4’s 20 bytes. If you use RFC 1323 timestamps, you will also have an additional 12 bytes of overhead:

VLAN+IPv6: 1000 * ((1500-40-20)/(1500+38+4)) = 933.85 Mbps

VLAN+IPv6+timestamps: 1000 * ((1500-40-20-12)/(1500+38+4)) = 926.07 Mbps
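The same calculation, extended with the 4-byte 802.1Q tag, the 40-byte IPv6 header and the 12-byte TCP timestamp option (10 bytes padded to 12), reproduces the three figures above. Again this is a sketch assuming 38 bytes of Ethernet framing overhead and full-size 1500-byte packets; the constant and function names are illustrative.

```python
ETH_OVERHEAD = 38    # preamble+SFD 8, MAC header 14, FCS 4, inter-frame gap 12
VLAN_TAG = 4         # one 802.1Q tag
TCP_TIMESTAMPS = 12  # TCP timestamp option, padded to 12 bytes


def goodput_mbps(mtu, l3_header, l4_header, extra_l2=0, line_rate_mbps=1000):
    """Upper bound on application payload rate when every frame is full-size."""
    return line_rate_mbps * (mtu - l3_header - l4_header) / (mtu + ETH_OVERHEAD + extra_l2)


# label -> (layer-3 header, layer-4 header incl. options, extra layer-2 bytes)
CASES = {
    "VLAN + TCP/IPv4": (20, 20, VLAN_TAG),                                # 946.82
    "VLAN + TCP/IPv6": (40, 20, VLAN_TAG),                                # 933.85
    "VLAN + TCP/IPv6 + timestamps": (40, 20 + TCP_TIMESTAMPS, VLAN_TAG),  # 926.07
}

for label, (l3, l4, extra) in CASES.items():
    print(f"{label:<30} {goodput_mbps(1500, l3, l4, extra):7.2f} Mbps")
```

Keeping each case as a header-size tuple makes it easy to add others, e.g. a double VLAN tag (`extra_l2=8`) or a larger MTU.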