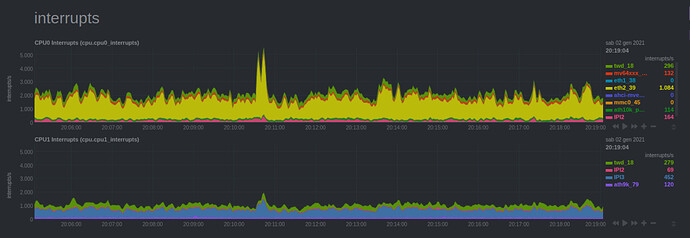

Is there any way to mitigate this situation? Although I have installed and enabled irqbalance, the load still seems to land almost exclusively on CPU0.

Do you have any reason to believe it’s wrong? Maybe one core can’t process all the interrupts, so the second core is used, but there’s no reason to make all cores do the same amount of work.

Do you have any reason to believe this is correct? Sorry, I don’t understand the answer. From my point of view, the optimum is a perfect balance of the load, interrupts included, between the two available cores. What I see here looks very different. Help me see more clearly: can you provide some links that explain why this is justifiable under normal conditions?

On a quick look the ethernet appears to be just single-channel, e.g. my WAN device shows:

    root@turris:~# ethtool -l eth2
    Channel parameters for eth2:
    Cannot get device channel parameters
    : Not supported

I mean… from my point of view, IRQs are just a secondary tweak; first you need to have and utilize multiple channels (or queues or whatever you call them).

This is the typical answer when the HW does not support multiple queues. I haven’t investigated details… but my gut feeling is that for a two-core device it’s not even worthwhile to have a multi-queue HW.

Okay, so are interrupts a consequence?

I think so. I think their (main) use is to notify the OS that a queue is ready (e.g. a packet has arrived in it).
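If you want to check which core actually services the NIC's interrupts, the per-CPU counters in `/proc/interrupts` are one place to look. Below is a rough Python sketch, not anything specific to Turris OS: the function names are made up for illustration, and it assumes the interface name (e.g. `eth2`) appears in the description column, which depends on the driver.

```python
def parse_interrupts(text):
    """Return (cpu_names, rows); each row is (irq_label, per_cpu_counts, description)."""
    lines = text.splitlines()
    cpus = lines[0].split()  # header line: "CPU0 CPU1 ..."
    rows = []
    for line in lines[1:]:
        label, sep, rest = line.partition(":")
        if not sep:
            continue
        tokens = rest.split()
        counts = []
        for tok in tokens[:len(cpus)]:
            if not tok.isdigit():
                break
            counts.append(int(tok))
        if len(counts) != len(cpus):
            continue  # summary lines such as "Err:" carry fewer counters
        rows.append((label.strip(), counts, " ".join(tokens[len(cpus):])))
    return cpus, rows


def share_per_cpu(rows, name):
    """Fraction of interrupts handled by each CPU for rows mentioning `name`."""
    totals = None
    for _label, counts, desc in rows:
        if name in desc:  # assumption: the device name shows up in the description
            totals = counts if totals is None else [a + b for a, b in zip(totals, counts)]
    if not totals or sum(totals) == 0:
        return []
    grand = sum(totals)
    return [c / grand for c in totals]
```

Something like `share_per_cpu(parse_interrupts(open("/proc/interrupts").read())[1], "eth2")` would then give the split between the cores. Keep in mind the counters are totals since boot, so a lopsided result on a single-queue NIC only confirms what `ethtool -l` already hinted at.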

I don’t really know the details of how these things work. I mainly worked on optimizing some DNS test cases like https://www.knot-dns.cz/benchmark/. There, I think the IRQ defaults were good and didn’t need to be changed (for our 10G+ Intel cards at least).

Thanks for your response and availability. I think there is not much to be done on Omnia, but I just wanted to know if it is a normal condition for the device.

I think it’s best if you test whether the CPU time consumed by the interrupts is actually a problem for you or not.

A few days ago I did some experiments with a Jetson Xavier NX, which is not really a router, but it is ARM and runs Linux. I attached two NICs via M.2 slots and just wanted to run an iperf3 test between them, sending 1 Gbps in both directions. It turned out the CPU was the limiting factor, as I could only get around 600 Mbps bidirectionally. What helped me pinpoint the IRQ issue was looking at htop with detailed CPU usage: soft IRQ time neared 100% on one of its cores, while the others were left idle. I also tried to help it with irqbalance; however, for some reason it did not help much, and all the soft IRQ load still landed on that one CPU (and that was with two physical NICs, so lack of multi-queue support should not have been an issue there).

So if you really want to know whether you need to dig deeper into IRQ balancing, just run a heavy load of your expected type, spin up htop and look at the soft IRQ times (they are displayed as pink-ish (magenta) in my htop).
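If htop is not at hand, roughly the same number can be taken from `/proc/stat`, where the seventh counter on each `cpuN` line is the time spent in softirq. This is only a sketch under that assumption about the field order (user, nice, system, idle, iowait, irq, softirq, steal); the function names are invented for illustration.

```python
import time


def parse_cpu_times(stat_text):
    """Return {cpu_name: (softirq_ticks, total_ticks)} from /proc/stat text."""
    times = {}
    for line in stat_text.splitlines():
        fields = line.split()
        # Per-CPU lines: "cpu0 user nice system idle iowait irq softirq steal ..."
        if len(fields) >= 8 and fields[0].startswith("cpu") and fields[0] != "cpu":
            ticks = [int(v) for v in fields[1:9]]  # guest time is already part of user
            times[fields[0]] = (ticks[6], sum(ticks))
    return times


def softirq_percent(before, after):
    """Percentage of each CPU's time spent in softirq between two samples."""
    result = {}
    for cpu, (sirq_after, total_after) in after.items():
        sirq_before, total_before = before.get(cpu, (0, 0))
        elapsed = total_after - total_before
        result[cpu] = 100.0 * (sirq_after - sirq_before) / elapsed if elapsed > 0 else 0.0
    return result


def sample(interval=5.0, path="/proc/stat"):
    """Take two snapshots `interval` seconds apart and return per-CPU softirq %."""
    with open(path) as f:
        first = parse_cpu_times(f.read())
    time.sleep(interval)  # keep the heavy traffic running during this window
    with open(path) as f:
        second = parse_cpu_times(f.read())
    return softirq_percent(first, second)
```

Calling `sample(5)` while the load test is running returns something like a dict of `cpu0`, `cpu1` percentages. One core near 100% while the other idles would match what I saw on the Jetson.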

You may need to configure htop to actually show the SIRQ, see https://openwrt.org/docs/guide-user/network/traffic-shaping/sqm-details#faq:

“Pressing F1 in htop, shows the color legend for the CPU bars, and F2 Setup → Display options → Detailed CPU time (System/IO-Wait/Hard-IRQ/Soft-IRQ/Steal/Guest), allows to enable the display of the important (for network loads) Soft-IRQ category.”

I enabled it in htop; it can also be monitored from the System Overview in netdata. In my case softirq averages 1.5% with sporadic peaks at 3%, so from this point of view everything seems to be OK.